The Defense Advanced Research Projects Agency (DARPA) held its third Grand Challenge event, the Urban Challenge, on 3 November 2007. The Urban Challenge is designed to accelerate the development of autonomous ground vehicle technology for future use in military and urban applications.

To be competitive for the prizes, the robotic vehicles must demonstrate that they can complete the 88 km course in less than 6 hours while driving safely and obeying all California traffic laws. The vehicles face driving challenges such as traffic circles, merges, four-way intersections, blocked roads, parking in a crowded lot, passing parked cars on narrow streets, and keeping up with the traffic flow on two- and four-lane roads. From the time the robotic vehicle leaves the start chute and begins the course, it is entirely under the control of its onboard mission computer; human observers may intervene only for purposes of safety.

1st prize to Tartan Racing

A self-driving SUV, called Boss, made history by driving swiftly and safely while sharing the road with human drivers and other robots. The feat earned Carnegie Mellon University's Tartan Racing first place in the DARPA Urban Challenge, which pitted 11 autonomous vehicles against each other on a course of urban roadways.

After reviewing judges' scorecards, DARPA officials concluded that Boss, a robotised 2007 Chevy Tahoe, followed California driving laws as it navigated the course and that it operated in a safe and stable manner. Many of the robotic vehicles made good decisions, which meant speed became the determining factor, and Boss was the fastest of the competitors by a large margin. Boss averaged about 22 km/h over an approximate distance of 88 km, finishing the course nearly 20 minutes ahead of second-placed Stanford.

Spinning laser is the real winner

Getting first place in a car race is great, but finishing in 1st, 2nd and 4th places is unique: Velodyne did just that. The company's spinning laser sensor was used by many of the top teams in the robotic car challenge. The new HDL-64E LIDAR (light detection and ranging) sensor was fitted to the vehicles of seven of the 11 finalists.

What is LIDAR?

The use of light pulses to measure distance is well known. The basic concept is to first emit a light pulse, typically using a laser (light amplification by stimulated emission of radiation) diode. The light pulse travels until it strikes a target. A portion of the light energy is then reflected back towards the emitter. A detector mounted near the emitter detects this return signal, and the time difference between the emitted and detected pulse determines the distance of the target. LIDAR expands the possibilities by using rotating mirrors to transmit pulses in two or three dimensions, enabling an overview of terrain topography to be established. It is used in a variety of fields, including atmospheric physics, geology, forestry and oceanography. LIDAR used in mobile environments like aircraft and satellites also incorporates positioning technology (GPS) to provide an absolute position reference. LIDAR is similar to radar, but it uses laser pulses rather than radio waves.
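The time-of-flight principle described above comes down to one line of arithmetic: the pulse covers the distance to the target twice, so the range is half the round-trip time multiplied by the speed of light. The Python sketch below illustrates this under simple assumptions (propagation at the vacuum speed of light, a single clean echo). The function names and the 400 ns example delay are hypothetical and are not taken from any particular sensor.

```python
# Speed of light in vacuum (m/s). Air slows light by roughly 0.03%,
# which this sketch ignores.
SPEED_OF_LIGHT_M_PER_S = 299_792_458.0


def range_from_round_trip(round_trip_s: float) -> float:
    """Return the target distance in metres for a round-trip time in seconds."""
    if round_trip_s < 0:
        raise ValueError("round-trip time cannot be negative")
    # The pulse travels out to the target and back, so halve the path length.
    return SPEED_OF_LIGHT_M_PER_S * round_trip_s / 2.0


def round_trip_for_range(distance_m: float) -> float:
    """Return the round-trip time in seconds for a target at distance_m metres."""
    if distance_m < 0:
        raise ValueError("distance cannot be negative")
    return 2.0 * distance_m / SPEED_OF_LIGHT_M_PER_S


# Hypothetical echo detected 400 ns after the pulse was emitted.
echo_delay_s = 400e-9
print(f"Target range: {range_from_round_trip(echo_delay_s):.2f} m")
```

A target about 60 m away therefore returns its echo in well under a microsecond, which is why the detector has to resolve the time difference very finely.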

LIDAR - A technology overview

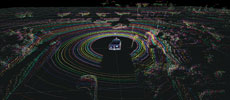

When the pulsed distance-measuring equipment is actuated, it emits multiple beams whose returns together make up the point cloud. A target within the point cloud appears as a notch. The distance, width and height of the target can be determined from the characteristics of the notch. Combining the characteristics of notches in the point cloud enables an image of the surrounding terrain to be formed. The denser the point cloud, the richer the image becomes.
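To form an image of the terrain, each measured distance has to be combined with the direction in which its beam was pointing. The Python sketch below shows one common way to do this, converting a range plus two pointing angles into x, y, z coordinates. The axis convention (y forward, x to the right, z up, azimuth measured clockwise from forward) and the function name are assumptions made for illustration; real sensors define their own conventions and calibration corrections.

```python
import math


def polar_to_xyz(range_m: float, azimuth_deg: float, elevation_deg: float):
    """Convert one range reading and its beam angles to (x, y, z) in metres.

    Assumed convention: y points forward, x to the right, z up;
    azimuth is measured clockwise from forward, elevation upward from horizontal.
    """
    azimuth = math.radians(azimuth_deg)
    elevation = math.radians(elevation_deg)
    # Projection of the beam onto the horizontal plane.
    horizontal_m = range_m * math.cos(elevation)
    x = horizontal_m * math.sin(azimuth)
    y = horizontal_m * math.cos(azimuth)
    z = range_m * math.sin(elevation)
    return (x, y, z)


# Hypothetical readings: (range in metres, azimuth in degrees, elevation in degrees).
readings = [(12.0, 0.0, -5.0), (12.5, 45.0, -5.0), (30.0, 90.0, 1.0)]
point_cloud = [polar_to_xyz(r, az, el) for (r, az, el) in readings]
for x, y, z in point_cloud:
    print(f"x={x:7.2f}  y={y:7.2f}  z={z:6.2f}")
```

Points with negative z lie below the sensor, so ground returns and raised obstacles can be told apart once every reading is in the same coordinate frame.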

However, the needs for autonomous vehicle navigation, mobile surveying and high-speed image capture place unrealistic demands on current systems. There are numerous systems that take excellent pictures, but take several minutes to collect a single image. Such systems are unsuitable for mobile sensing applications.

In the arena of autonomous navigation it is generally accepted that human response time is of the order of several tenths of a second. It is therefore realistic to provide the navigation computer with a complete, fresh update at least 10 times a second. The vertical field of view needs to extend above the horizon, in case the car enters a dip in the road, and should extend down as far as possible, so that the sensor sees the ground immediately in front of the vehicle when the road falls away in a steep decline. Other mobile sensing applications beyond autonomous navigation also suffer from a lack of sensor data density and frame rates, given the vast areas to be captured.
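The link between update rate and vehicle speed can be made concrete by working out how far a vehicle moves between two consecutive sensor updates. The Python sketch below does this for the 10 updates per second mentioned above, using the roughly 22 km/h that Boss averaged as one example speed. The 50 km/h figure and the function name are hypothetical, chosen only for comparison.

```python
def metres_per_update(speed_kmh: float, update_hz: float) -> float:
    """Return the distance in metres a vehicle covers between two sensor updates."""
    if update_hz <= 0:
        raise ValueError("update rate must be positive")
    speed_m_per_s = speed_kmh / 3.6  # 1 m/s equals 3.6 km/h
    return speed_m_per_s / update_hz


# 22 km/h is Boss's approximate average; 50 km/h is a hypothetical urban speed.
for speed_kmh in (22.0, 50.0):
    gap_m = metres_per_update(speed_kmh, update_hz=10.0)
    print(f"{speed_kmh:.0f} km/h at 10 Hz: {gap_m:.2f} m between updates")
```

At 10 Hz the vehicle moves well under two metres between updates at these speeds, whereas a system that needs minutes per image would leave it driving blind for hundreds of metres.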

HDL-64E product description

Velodyne has developed and produced a patented High Definition Lidar sensor, the HDL-64E, designed to satisfy the demands of mobile sensing applications. The unit rotates at 600 rpm by default, but can be instructed to run at between 300 and 900 rpm. At 600 rpm it delivers a 10 Hz frame rate and one million distance points per second. This data can be captured using a standard Ethernet packet capture utility such as Wireshark, or processed by an autonomous navigation system to create a map that can be used to identify obstacles, find drivable road, and ultimately determine steering, braking and acceleration requirements.
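The figures quoted above are related by simple arithmetic, sketched in Python below: the spin rate in rpm divided by 60 gives the frame rate, and the point rate divided by the frame rate gives the number of points in each full revolution. Two assumptions go beyond the text and are only illustrative: that the point rate stays at one million per second across the 300 to 900 rpm range, and that the points are shared equally among 64 lasers (a count suggested by the model name). The function names are hypothetical.

```python
def frame_rate_hz(rpm: float) -> float:
    """Return full revolutions (frames) per second for a spin rate in rpm."""
    return rpm / 60.0


def points_per_frame(points_per_second: float, rpm: float) -> float:
    """Return the number of distance points collected in one revolution."""
    return points_per_second / frame_rate_hz(rpm)


def azimuth_step_deg(points_per_second: float, rpm: float, laser_count: int) -> float:
    """Return the angular spacing between successive points from one laser.

    Assumes the points are shared equally among laser_count lasers.
    """
    points_per_laser = points_per_frame(points_per_second, rpm) / laser_count
    return 360.0 / points_per_laser


POINT_RATE = 1_000_000  # points per second, from the article
LASER_COUNT = 64        # assumption: inferred from the model name

for rpm in (300, 600, 900):
    print(
        f"{rpm} rpm: {frame_rate_hz(rpm):.0f} Hz, "
        f"{points_per_frame(POINT_RATE, rpm):,.0f} points per frame, "
        f"{azimuth_step_deg(POINT_RATE, rpm, LASER_COUNT):.3f} deg between points"
    )
```

Under these assumptions a slower spin gives a denser frame at a lower update rate, and a faster spin the reverse, so the choice of spin rate is a trade-off between image richness and freshness.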

For more information contact Velodyne, +1 408 465 2800, www.velodyne.com/lidar

© Technews Publishing (Pty) Ltd | All Rights Reserved